You might have seen Anthropic in the headlines this week. We’re accustomed to hearing regularly from the major AI vendors about their latest product developments, but this time the news was not a new model feature or a significant performance improvement.

Instead, Anthropic shared details of a long-feared but now real misuse of generative AI, large language models (LLMs), and their agentic capabilities: their application to information security breaches, hacking, and cyber-espionage.

State-linked attackers have reportedly leveraged Claude (Anthropic’s LLM) and its AI coding interface Claude Code to orchestrate a campaign of cyberattacks against “around thirty global targets”. These included “large tech companies, financial institutions, chemical manufacturing companies, and government agencies”. A subset of these targets confirmed breaches as a result of these activities.

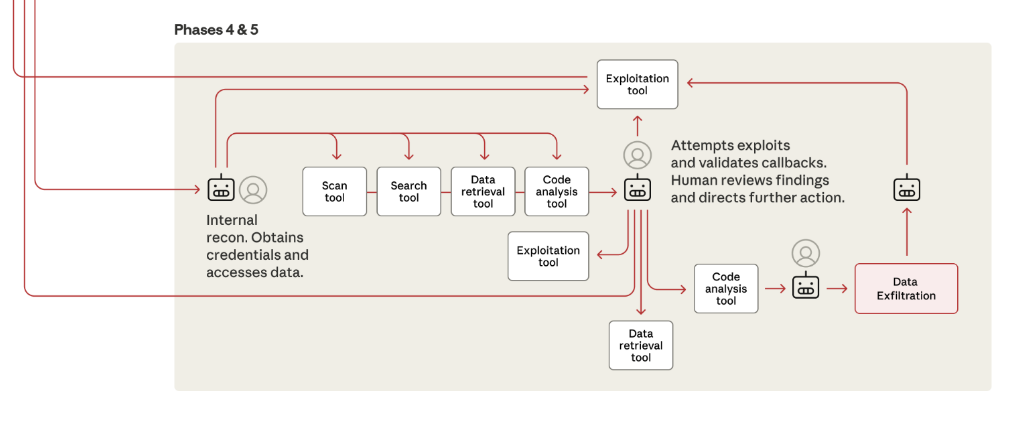

Anthropic have published a full report on the incident on their website, and it is a useful explainer for every aspect of the attack. This was a sophisticated operation with numerous steps and elements. However, one detail stood out to us at Cofide:

> …Claude executed systematic credential collection across targeted networks. This involved querying internal services, extracting authentication certificates from configurations, and testing harvested credentials across discovered systems.

Having gathered a library of these credentials, Claude could exploit any implicit or perimeter-based trust to move laterally through the network. Crucially, it appears the LLM was able to chain enough authentication calls to traverse each target’s network topology, then retrieve and exfiltrate sensitive data across a number of different architectures.

> Lateral movement proceeded through AI-directed enumeration of accessible systems using stolen credentials. Claude systematically tested authentication against internal APIs, database systems, container registries, and logging infrastructure, building comprehensive maps of internal network architecture and access relationships.

The report emphasises the shrinking role of human operators in this process. Hackers were involved at a few key verification and intervention stages, but a significant proportion of the tasks were solved and orchestrated by the model itself. In fact, it could be argued that an attack of this scale and scope would previously have been impossible without the aid of an LLM in agentic mode.

While we have no knowledge of the specific security posture maintained by the targets in this case, we can make some inferences. An attacker’s ability to move laterally using a haul of previously compromised credentials points to several likely vulnerabilities: credential sprawl, legacy network authentication based on implicit trust, and long-lived secrets. Modern solutions to each of these problems exist (for example, the SPIFFE standard for workload identity and its open-source implementation, SPIRE), but configuring and deploying them can be challenging at enterprise scale.
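To make the contrast between implicit trust and explicit workload identity concrete, here is a minimal Python sketch. It assumes the caller's SPIFFE ID has already been cryptographically verified upstream (for example, taken from an X.509 SVID presented during mTLS). The trust domain, service names, and policy table are hypothetical, and the ID parsing is deliberately simplified rather than a complete implementation of the SPIFFE specification.

```python
from urllib.parse import urlsplit

# Hypothetical trust domain and policy table, for illustration only.
TRUST_DOMAIN = "example.org"
ALLOWED_CALLERS = {
    "payments-db": {"spiffe://example.org/ns/prod/sa/payments-api"},
    "container-registry": {"spiffe://example.org/ns/ci/sa/builder"},
}


def parse_spiffe_id(raw: str) -> tuple[str, str]:
    """Split a workload SPIFFE ID into (trust_domain, path).

    Simplified check: scheme must be 'spiffe', a trust domain and path
    must be present, and no userinfo, port, query, or fragment is allowed.
    """
    parts = urlsplit(raw)
    if parts.scheme != "spiffe" or not parts.netloc or not parts.path:
        raise ValueError(f"not a workload SPIFFE ID: {raw!r}")
    if parts.query or parts.fragment or "@" in parts.netloc or ":" in parts.netloc:
        raise ValueError(f"disallowed component in SPIFFE ID: {raw!r}")
    return parts.netloc, parts.path


def is_authorised(service: str, caller_id: str) -> bool:
    """Allow a call only if the verified caller ID is explicitly listed.

    Network location plays no part in the decision, so a credential
    harvested from one workload does not unlock unrelated services.
    """
    try:
        trust_domain, _path = parse_spiffe_id(caller_id)
    except ValueError:
        return False
    if trust_domain != TRUST_DOMAIN:
        return False
    return caller_id in ALLOWED_CALLERS.get(service, set())
```

In this sketch, a CI builder identity that is valid for the container registry is still refused by the payments database, which is the property that limits lateral movement with a stolen identity.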

Whatever the hurdles to securing modern cloud architectures, the risks of maintaining the status quo could not be clearer. We now have explicit confirmation that access tokens and credentials are being harvested and used in a semi-automated manner by agentic AI systems under the direction of bad actors, to devastating effect. As with trends across all aspects of AI tooling, we can only expect the sophistication and efficacy of these techniques to increase with time.

Perhaps most ominously: all this was made possible by a consumer product, available to anybody with a credit card.

The approach to mitigating such threats remains the same whether or not an attacker is using the latest agentic AI. A modern workload identity strategy, grounded in zero trust security fundamentals, addresses structural and technological vulnerabilities like identity sprawl, the potential for lateral movement, and long-lived secrets.

Cofide’s Connect platform addresses each of these, allowing you to switch off harvestable, long-lived credentials in favour of dynamic, auto-rotating, short-lived identities from a modern workload identity fabric, built on open standards and deployable at enterprise scale.
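As a general illustration of why short-lived identities blunt credential harvesting (and not a description of how any particular product implements it), the Python sketch below compares how long a stolen credential remains usable under a long and a short lifetime. The `Credential` type, the `usable_window` helper, and the one-year and five-minute lifetimes are all assumptions chosen for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Credential:
    """Hypothetical credential with a fixed lifetime (illustration only)."""
    subject: str
    issued_at: datetime
    ttl: timedelta

    def is_valid(self, now: datetime) -> bool:
        # Valid from issuance up to, but not including, the expiry instant.
        return self.issued_at <= now < self.issued_at + self.ttl


def usable_window(cred: Credential, stolen_at: datetime) -> timedelta:
    """Time an attacker has to use a credential after stealing it."""
    remaining = cred.issued_at + cred.ttl - stolen_at
    return max(remaining, timedelta(0))


# Assumed lifetimes: a static API key versus an auto-rotated identity.
issued = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
static_key = Credential("billing-service", issued, timedelta(days=365))
short_lived = Credential("billing-service", issued, timedelta(minutes=5))

stolen_at = issued + timedelta(minutes=3)
static_window = usable_window(static_key, stolen_at)   # almost a full year
short_window = usable_window(short_lived, stolen_at)   # two minutes
```

Under these assumed values, the harvested short-lived identity expires minutes after the theft, whereas the static key remains usable for nearly a year unless someone notices and revokes it.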

Get in touch today to see how your organisation can start its zero trust journey, and keep out the bad actors (human or machine).

Talk to us for a demo and join our early access programme.